For a full list of BASHing data blog posts see the index page.

What is +ACY- doing in the data?

Almost all the data files I audit are in UTF-8, but the files have often started out in other encodings. This can lead to some hilarious mojibake and loads of fun for me as I try to reverse the encoding conversion failures.

Last week a file appeared with mojibake I'd never seen before. Here are the original characters followed by the character strings as they appeared in the audit file:

Á --> +AME-

á --> +AOE-

& --> +ACY-

é --> +AOk-

í --> +AO0-

ó --> +APM-

ö --> +APY-

ü --> +APw-

After some googling I found the culprit, namely RFC 2152. This 1997 recommendation (not a standard) defines "UTF-7" and is now regarded as obsolete. UTF-7 was designed to be an email-safe way to handle Unicode characters by converting them into 7-bit US ASCII strings.

To show how UTF-7 works, I'll encode the Latin capital letter A with acute, "Á", Unicode U+00C1.

- In UTF-16, U+00C1 is 0000 0000 1100 0001

- Starting from the left, group the UTF-16 binary in lots of 6 bits: 000000 001100 0001

- If the last lot has fewer than 6 bits, pad it out with trailing zeroes: 000000 001100 000100

- Read each 6-bit lot as if it was in Base64: A M E

- Add a leading "+" to indicate that the block is UTF-16-modified-then-Base64-encoded-and-tweaked

- Add a trailing "-" to indicate the end of the block
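The steps above can be sketched in a few lines of Python. This is only an illustration of the walkthrough for a short run of characters, not a complete RFC 2152 encoder; the function name `utf7_block` and the `B64` alphabet string are my own and hypothetical.

```python
# Standard Base64 alphabet: index 0 is "A", index 63 is "/"
B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"

def utf7_block(text):
    """Encode text as one UTF-7 shifted block, following the steps above."""
    # Write the text as UTF-16 (big-endian) and turn it into a string of bits
    bits = "".join(f"{byte:08b}" for byte in text.encode("utf-16-be"))
    # Pad with trailing zeroes so the bits split evenly into lots of 6
    bits += "0" * (-len(bits) % 6)
    # Read each 6-bit lot as a Base64 character
    chars = "".join(B64[int(bits[i:i + 6], 2)] for i in range(0, len(bits), 6))
    # Add the leading "+" and the trailing "-"
    return "+" + chars + "-"

print(utf7_block("Á"))  # +AME-
print(utf7_block("&"))  # +ACY-
```

Python's built-in `utf-7` codec reverses the process, so `b"+AME-".decode("utf-7")` gives back "Á".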

To decode a small number of UTF-7 strings, I recommend the excellent string-functions.com Web page with a UTF-7-vs-US-ASCII table.

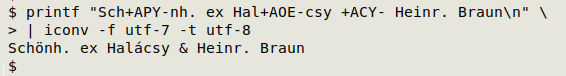

To convert a block of text riddled with UTF-7 constructions, the best bet is iconv on the command line, converting from UTF-7 to UTF-8.

A UTF-7 code block is an example of a reversible replo: it's easily fixed by changing the encoding of the file.
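As a rough illustration of that reversibility, here is a minimal Python sketch that decodes UTF-7 text with Python's built-in `utf-7` codec. It is an alternative to iconv, not the command I used, and the sample string and the name `fix_utf7` are made up for the example.

```python
def fix_utf7(raw_bytes):
    """Decode bytes containing UTF-7 blocks into an ordinary Python string."""
    # Plain ASCII passes through unchanged; "+...-" blocks are decoded
    return raw_bytes.decode("utf-7")

# Hypothetical sample line with three UTF-7 blocks
sample = b"G+APM-mez +ACY- M+APw-ller"
fixed = fix_utf7(sample)
print(fixed)  # Gómez & Müller

# Re-encode as UTF-8 before writing the repaired text back to a file
utf8_bytes = fixed.encode("utf-8")
```

One caveat with this approach: a literal "+" in genuine ASCII text is also treated as the start of a block, so it is worth checking the output before overwriting the original file.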

Last update: 2021-07-14

The blog posts on this website are licensed under a Creative Commons Attribution-NonCommercial 4.0 International License