For a full list of BASHing data blog posts see the index page. ![]()

Extra commas in a CSV

Every once in a while I'm given a haunted CSV to audit. I call it "haunted" because it looks like it contains the ghosts of data items that might once have been entered in right-hand-side columns and bottom-most rows. All that remains of the missing entries are long strings of commas. Below is a simplified example, called "ghost". Please note that field 2 in the second record and field 3 in the third record have legitimately blank entries (after the highlighted comma).

Fld1,Fld2,Fld3,,,,,,,

aaa,"b, b, b",ccc,,,,,,,

ddd,,"ff, f",,,,,,,

ggg,hhh,,,,,,,,

,,,,,,,,,

,,,,,,,,,

I don't know how CSVs come to be haunted, although I've seen reports on the Web (like this one) that say Microsoft Excel can do it. I've tried to reproduce the behaviour with LibreOffice Calc (version 6.1) and Gnumeric (version 1.12) spreadsheets, but I can't. In both cases the application only exports the visibly filled cell range to CSV, even though deleted entries are still in memory and can be reinstated with "Undo".

Cleaning out the unwanted commas. This isn't as straightforward as it looks, because some end-of-line commas might be valid field separators. A practical strategy is to

- count the number of trailing commas in the header line

- delete that number of commas from the end of every line

- delete any lines that consist entirely of commas

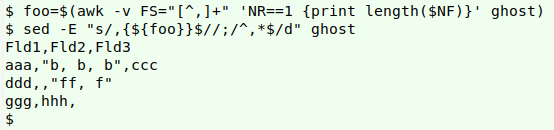

There are various ways to do these three jobs with shell tools. I'll count with AWK and delete with sed:

awk -v FS="[^,]+" 'NR==1 {print length($NF)}' ghost

foo=$(awk -v FS="[^,]+" 'NR==1 {print length($NF)}' ghost)

sed -E "s/,{${foo}}$//;/^,*$/d" ghost

The AWK command looks only at the header line (NR==1). Field separators are defined as "one or more characters that aren't commas" (FS="[^,]+"). The three field separators in the header are therefore "Fld1", "Fld2" and "Fld3", and the four fields they separate are "" (empty string before separator "Fld1") / , / , / ,,,,,,,. AWK is told to print the length (in characters) of the last field (print length($NF), which is seven. This number is stored in the shell variable "foo".

GNU sed is used with the option "-E", which allows extended regular expressions. With the first command (s/,{${foo}}$//), sed deletes strings of seven commas leading up to the end of the line, where "seven" is represented by the shell variable "foo". With the second command (/^,*$/d) sed looks for and deletes any line containing zero or more commas and nothing else between line start and line finish.

Other extra commas. Related to haunted CSVs are certain CSVs that contain metadata: "explainer" lines that aren't part of the rows-and-columns data matrix. Sometimes the metadata appear in an extended header, like this:

eventDate is the IS0 8601 date of the occurrence.,,

recordedBy is the name of the bird recorder.,,

individualCount is the number of birds observed.,,

eventDate,recordedBy,individualCount

2019-05-26,A. Samson,11

2019-05-29,A. Samson | B. Mannheim,5

2019-06-03,C. Spry,8

I don't mind seeing metadata lines placed like this at the top of a table, because I can begin my data processing simply by specifying the table's start line, in this case line 4. What I find annoying, especially in long tables, is metadata lines placed at the table's bottom after a series of data-free "spacer" lines:

eventDate,recordedBy,individualCount

2019-05-26,A. Samson,11

2019-05-29,A. Samson | B. Mannheim,5

2019-06-03,C. Spry,8

,,,

,,,

,,,

eventDate is the IS0 8601 date of the occurrence.,,

recordedBy is the name of the bird recorder.,,

individualCount is the number of birds observed.,,

This might have looked OK in the spreadsheet from which the CSV was exported, but it's a nuisance in processing tables with many records before the ",,," etc. A workaround is to move those last lines to the head of the table as an extended header, and to delete the spacer lines.

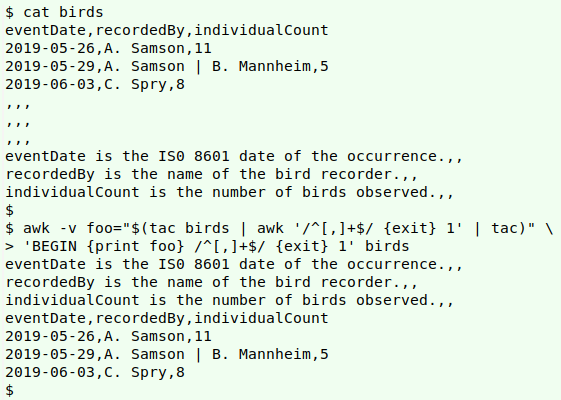

For most such tables I do the moving and deleting in a text editor. Here's a quirky command-line method for the file "birds":

awk -v foo="$(tac birds | awk '/^[,]+$/ {exit} 1' | tac)" \

'BEGIN {print foo} /^[,]+$/ {exit} 1' birds

The command processes "birds" twice. In the first pass, tac reverses the line order. The result is fed to AWK, which while printing all lines (1) is looking for a match to /^[,]+$/, namely a line composed entirely of commas. When it finds the first such line (line 4 in the reversed "birds"), AWK quits (next) and the reversed metadata lines are passed to tac to put them in the correct order.

The captured metadata lines are stored in the AWK variable "foo". AWK processes "birds", but first prints out the metadata lines (BEGIN {print foo}). AWK is again told to print all lines in "birds" and again told to exit when it finds the first line composed entirely of commas.

The command is a little quirky because it uses the same AWK instructions twice! (/^[,]+$/ {exit} 1)

That command won't work if there are spacer lines between metadata lines. In cases like that I use a text editor to do the moving and deleting, and mutter quietly to myself ("Mumble, mumble...dingbats...mumble").

Last update: 2020-07-08

The blog posts on this website are licensed under a

Creative Commons Attribution-NonCommercial 4.0 International License